Mass analyzing the structure of inbound EDIFACT messages

We want to increase the quality and unify the structure of our inbound EDIFACT DESADV messages from our suppliers. So far we had no guideline to hand out so we started to analyze the current messages to gain knowledge what information is currently provided. It is a bit of hard work but I was curious if I could somehow automate some of the steps. Others do crosswords for fun and these kind of tasks urge me.

Gather Files

Simple: Just copy tens of thousands of inbound messages from your archive to your local machine.

Complex: I was running the analyzing conversion (locally) for ~10 hours and was able to gather data of ~15k files. To be sure to have at least some data for all our suppliers I used a PowerShell script to get the latest n files from every archive folder.

$path = "X:\path\to\your\archive\suppliers\inbound\"

$directories = Get-ChildItem $path -Recurse -Filter *DESADV* -Directory

foreach ($directory in $directories)

{

Get-ChildItem $directory.FullName -Filter "*.received" | sort LastWriteTime | select -last 5 | % { Copy-Item $_.FullName -Destination "C:\some\local\path\to\collect\files" }

}Create a universal Mapping

This was the most "boring" part as I had to read every segment and field in the source message and copy the value into an easy structure for analyzing and the DESADV EDIFACT structure has over 800 fields and I wanted to have the option to check for every segment, even if I'm sure it's not used.

I used the Seeburger BIC Mapping Designer and created a new mapping with source structure EDIFACT DESADV and a non specific CSV structure that has just one line and a hundred fields per line.

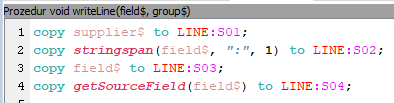

I used a small procedure so I could create the output with just one call per field.

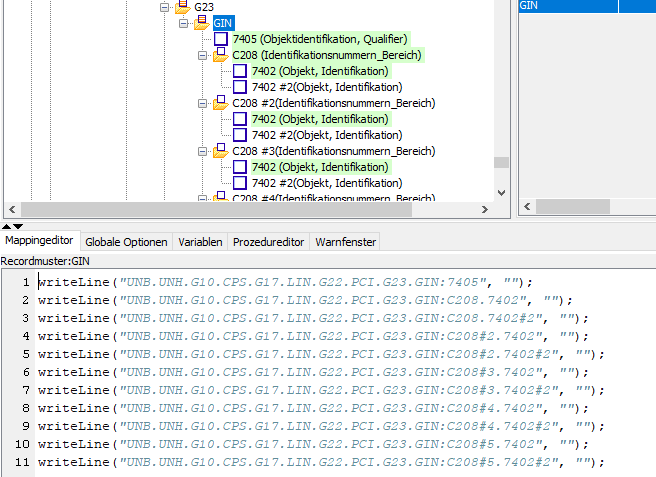

Then I had to add one call for every god damn field in every source record.

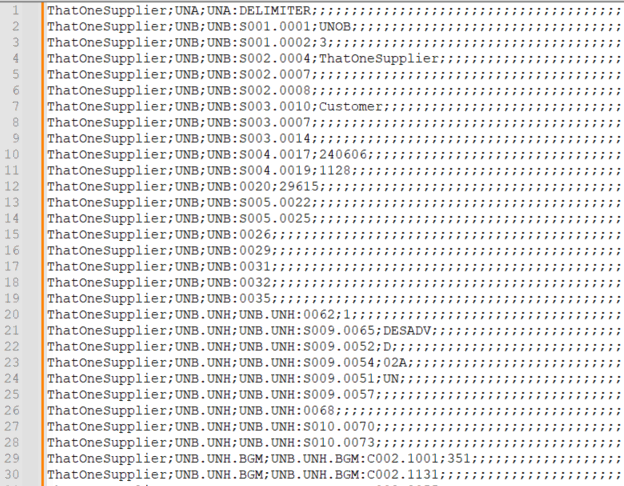

Running that mapping against a folder containing all source files you receive one CSV with all fields and values per source message.

Did I miss a field?

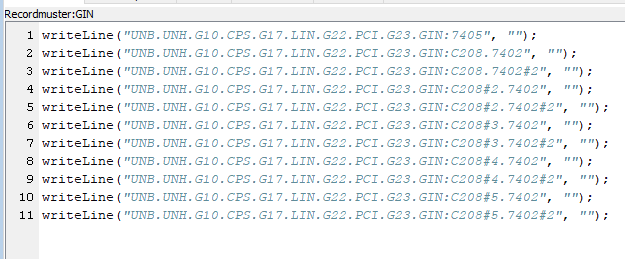

I was a bit worried that I could miss a field from the source structure and in addition I was getting tired of creating the output calls so I wondered if it was possible to create the mapping automatically by another mapping, could call it mapping inception. I did not go full time waste mode but I created the procedure call for every field.

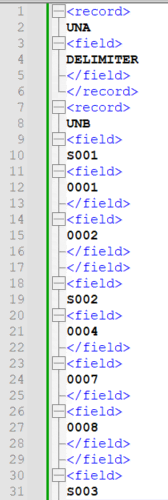

First step was to export the EDIFACT DESADV structure from the BIC Mapping Designer and to flat it out so I could handle it in the second mapping.

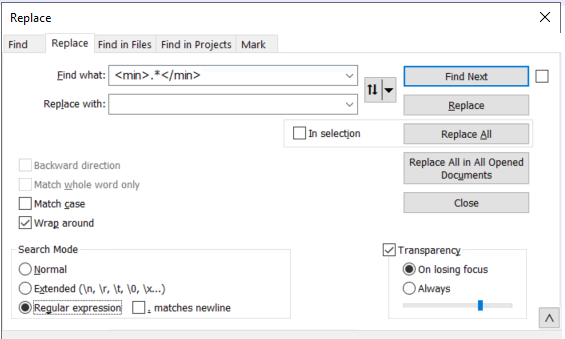

I removed all unneeded XML tags by search and replace in Notepad++ with simple regex logic and in the end it looked like this.

The code itself is not a beauty but it does the job. I used the same universal CSV structure as source without a destination message.

local string$;

local i%;

local j%;

if record?

records$[record_level%] = LINE:S01;

record_level% = record_level% + 1;

record? = false;

endif

if field?

fields$[field_level%] = LINE:S01;

field_level% = field_level% + 1;

field? = false;

endif

if LINE:S01 = "<record>"

traceln("");

record? = true;

did_field? = false;

endif

if LINE:S01 = "<field>"

field? = true;

did_field? = false;

endif

if LINE:S01 = "</record>"

record_level% = record_level% - 1;

did_field? = false;

endif

if LINE:S01 = "</field>"

if did_field?

did_field? = false;

field_level% = field_level% - 1;

else

string$ = "";

for i% = 0 to record_level% - 1

if len(string$) = 0

string$ = records$[i%];

else

string$ = string$ & "." & records$[i%];

endif

next

for j% = 0 to field_level% - 1

if j% = 0

string$ = string$ & ":" & fields$[j%];

else

string$ = string$ & "." & fields$[j%];

endif

next

if string$ = old_string$

old_counter% = old_counter% + 1;

traceln("writeLine(\"" & string$ & "#" & old_counter% & "\", \"\");");

else

traceln("writeLine(\"" & string$ & "\", \"\");");

old_counter% = 1;

endif

copy string$ to old_string$;

field_level% = field_level% - 1;

did_field? = true;

endif

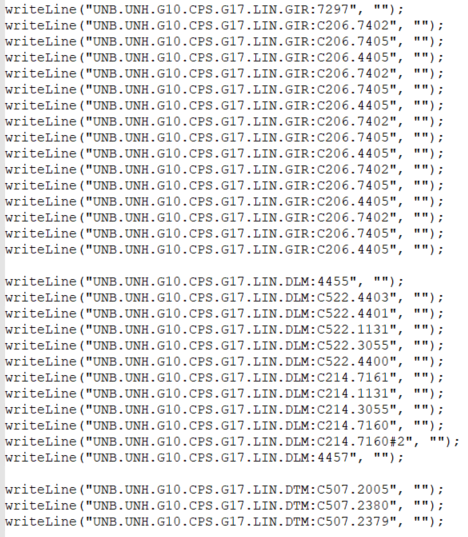

endifThis is the output the mapping traces to the log file. I added a break after every record so it is clear that the coding is for the next record program. Now I had to copy every block into the correct records of the first mapping and I was ready to go.

But!

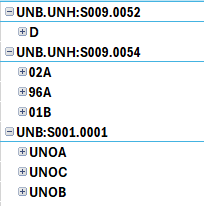

Some groups in the DESADV message occur several times and in these cases I had to manually edit the path.

Analyzing

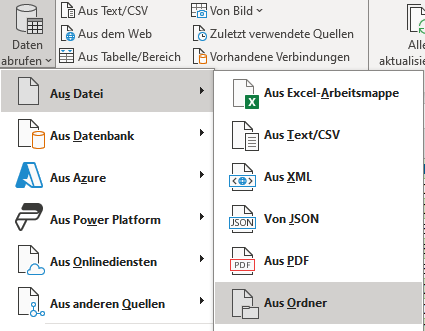

Finally the fun part. Running the mapping against a folder containing all source messages you have one file per source file. To merge all data I simply use the Power Query functions in Excel.

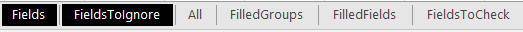

I created two sheets, one containing all fields that have non static values like PO reference, shipment number, ... and replaced the values with a dummy value. This helps me so I don't get all lines with all different numbers. The other sheet contains the fields where I want to check the different options. Which qualifiers are used or which partner roles are used in the NAD segment.

All data preparation takes place in Power Query - remove duplicates, sorting, etc. For the results I created a small pivot table based on the data prepared by Power Query.

For example we wanted to know which EDIFACT version is used by the suppier and if you expand the version you would see which suppliers use which version.